An Introduction to Data Ethics

This article was initially published on DataCamp on February 2, 2023.

What Is the Ethical Use of Data?

In September 2018, hackers injected malicious code into British Airways’ website, diverting traffic to a fraudulent replica site. Customers then unknowingly gave their information to fraudsters, including login details, payment card information, addresses, and travel booking information.

In March 2021, over 533 million Facebook users’ data was posted on an open hackers’ forum. It was one of the largest data breaches of all time. The incident raised concerns about how organizations store and secure personal information, as well as whether they should be allowed to have access to such data in the first place.

(You can find more examples of data breaches and other consequences here.)

The importance of data ethics cannot be overstated; it's impossible to ignore the fact that we're living in a world that increasingly depends on information. Consequently, data ethics will become an increasingly important factor for everyone—from businesses to governments—to consider when using technology for economic or personal reasons.

Data is a valuable asset that can be used to build the next great company or improve the lives of countless people. However, it is also a resource that many organizations are not keeping secure.

That's why understanding data ethics is so important: it helps us discern what we're doing with our own information and how we can protect ourselves from its misuse without sacrificing our ability to participate in society freely. It also helps us think about how we want society to function in the future, not just in our lifetimes, but for future generations as technology continues to evolve.

This is also why AI regulation is not just about protecting data; it's about protecting human beings from the unknown consequences of their own actions.

Understanding the Current & Potential Future Data Landscape

Data ethics is an issue that faces everyone, whether they work directly with the technology or not. In fact, it's something that all of us have to consider as we move forward with our lives in the digital age.

It's essential to remember that AI and machine learning are still relatively new technologies: they're not going away any time soon, but neither are they in their final form. This is why there is a sense of urgency regarding questions about how these technologies should be used to ensure that they remain ethical.

Since our lives are increasingly lived online, the line between private and public has been blurred. And while there are some legal protections for your data (such as the European Union's General Data Protection Regulation), there are also enough gray areas to make it difficult to manage your own privacy.

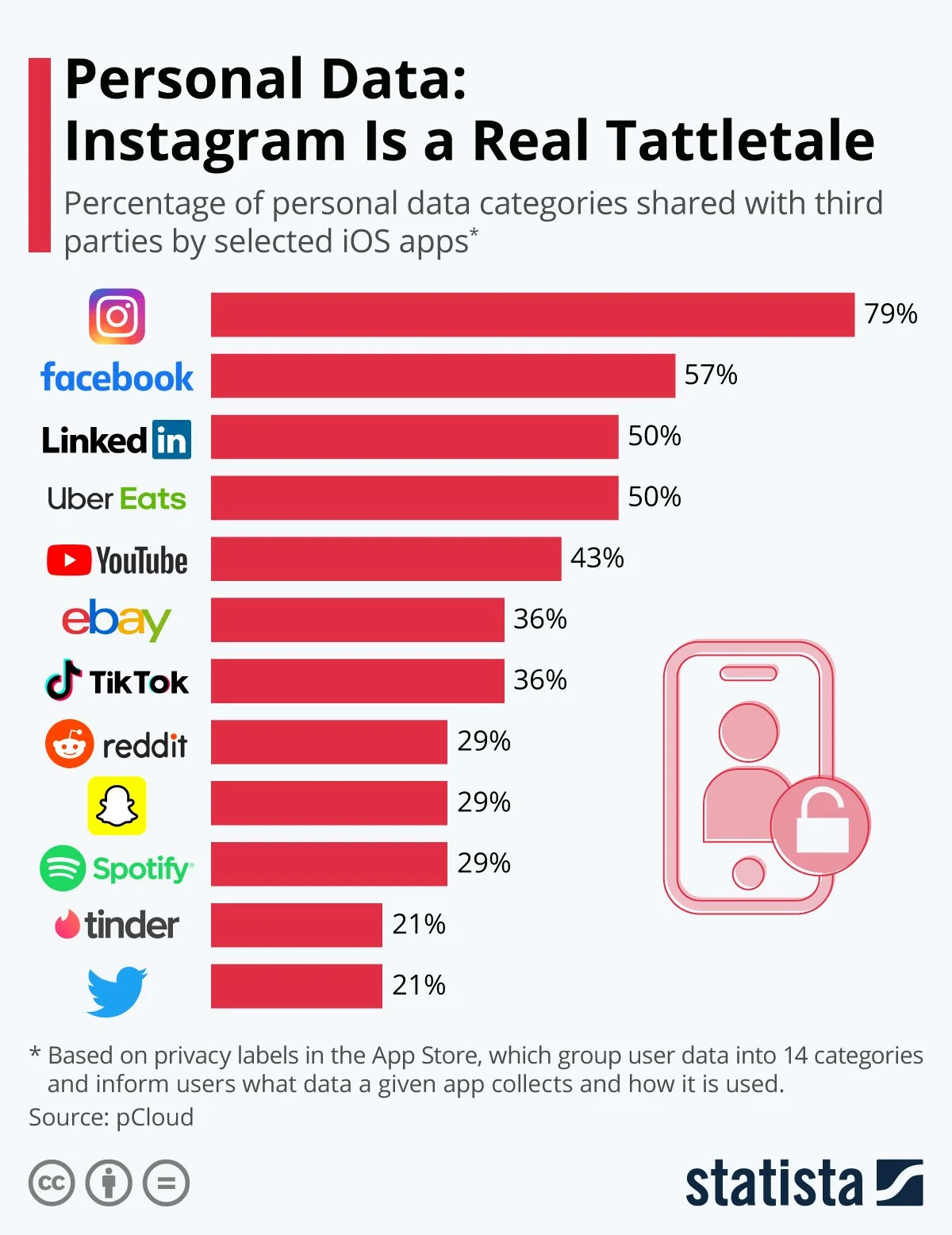

Research about popular mobile apps and social websites reveals that nearly all sell our personal information to third parties.

[Reference: Statista 2021]

And should you be a frequent user of some of these platforms, you might be surprised about exactly what data is being sold. From your email to your chat or location history, these companies gain a lot when you unwittingly let them into your life through mobile or other devices.

[Reference: Harvard Business Review]

Many organizations are developing the infrastructure which will make AI and data science possible in various industries, rendering some of this collected data even more meaningful to organizations hoping to purchase it. At the same time, tools such as DALL-E and the open-source Stable Diffusion demonstrate how quickly AI tools can improve once made widely available. With all these rapid developments, our only assurance for the future is that data privacy, security, and a sense of fairness will become more and more challenging to guarantee.

Principles of Big Data Ethics

While there is no standard method for approaching data projects, big or otherwise, the essential aim is to translate basic human rights into the digital age. Regardless of the terms used, considering potential harm to people, organizations, and systems is the motivation behind these tenets.

Governmental risk agencies have provided some guidance on practical application. From NIST, the US technology standards organization, and DataEthics.eu comes this approach to considering challenges unique to your industry:

Harms to people:

Adverse impact on an individual’s civil liberties or physical safety, discrimination against groups of people, or the repression of democratic participation at scale.

Harms to organizations:

Adverse impact on business operations (monetary loss, security breaches, and reputational harm).

Harms to systems:

Large-scale harms, such as to financial systems.

Some companies have also included the following values which speak directly to their offerings: good intent, data-driven decision-making, and user-driven design. Otherwise, when developing a data project, the following four principles are a clear, actionable basis for development:

Transparency

Transparency refers to clear communication of what data will be gathered, whether it will be stored, and who it might be shared with. While terms and conditions documents can be long, convoluted, and almost require legal expertise, users should have some semblance of understanding about how their information will be used by the company.

Ownership/Accountability

Ownership and accountability refer to an organization taking responsibility for what happens to an individual’s data after it has been collected. From data leaks to sales to a third party, companies need to accept accountability for the damage their processes can cause users.

Individual Agency

Agency refers to one’s ability to make meaningful choices about personal data. Whether it’s the ability to remove themselves completely or choose what collected information should be stored, allowing individuals the choice to control what happens to their information is a human right.

Accessibility

Accessibility refers to one’s ability to access personal data that has been collected about them, and expect fair treatment free from bias or discrimination.
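To make the four principles more concrete, here is a minimal sketch of how they might show up in code. It is not a standard or a recommended design: the class PersonalDataStore and all of its method names are hypothetical, and a real system would also need authentication, durable storage, and legal review.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class PersonalDataStore:
    """Toy in-memory store; every name here is hypothetical."""

    # Transparency: the purposes declared to the user up front.
    declared_purposes: dict[str, str]
    records: dict[str, dict[str, str]] = field(default_factory=dict)
    # Accountability: an append-only log of what was done with the data.
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)

    def _log(self, user_id: str, action: str) -> None:
        timestamp = datetime.now(timezone.utc).isoformat()
        self.audit_log.append((timestamp, user_id, action))

    def collect(self, user_id: str, field_name: str, value: str) -> None:
        # Refuse to store anything the user was never told about.
        if field_name not in self.declared_purposes:
            raise ValueError(f"no declared purpose for {field_name!r}")
        self.records.setdefault(user_id, {})[field_name] = value
        self._log(user_id, f"collected {field_name}")

    def export(self, user_id: str) -> dict[str, str]:
        # Accessibility: users can get a copy of everything held about them.
        self._log(user_id, "exported data")
        return dict(self.records.get(user_id, {}))

    def erase(self, user_id: str) -> bool:
        # Individual agency: users can remove themselves completely.
        existed = self.records.pop(user_id, None) is not None
        self._log(user_id, "erased data")
        return existed


store = PersonalDataStore(declared_purposes={"email": "account login"})
store.collect("user-1", "email", "a@example.com")
print(store.export("user-1"))
store.erase("user-1")
print(store.export("user-1"))
```

The design choice worth noticing is that collection fails loudly when no purpose was declared, so transparency is enforced by the code rather than left to a policy document.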

Applying Data Ethics

Aside from general philosophy, individual practitioners and organizational leaders must develop practices and processes to guide their everyday decisions.

Guidance for Individual Practitioners

Software, Machine Learning, or AI Engineers

The skills needed to develop software are not the same as those which can determine whether a project is ethical. This is evidenced by many examples of highly biased and unethical algorithms and software products defended by technical founders.

While software engineers can (and should) broaden their understanding of common ethical pitfalls, the key application for individual technical contributors is to collaborate with ethicists on challenging questions. Understanding that additional time and effort to work through ethical analyses of a product is not wasted, nor a delay of ‘innovation’, is the most critical shift in perspective for these professionals today.

Documentation is a best practice which will be even more critical as transparency, accountability, and accessibility practices are incorporated into your products.

Technical Project Managers

As a project manager, navigating additional requirements related to ethical safeguards will be a new challenge. Estimating time and effort related to these unprecedented tasks and developing a clear understanding of new stakeholders (researchers, ethicists, etc.) will be critical in creating meaningful outcomes for your technical team. Managing requirements and navigating scope and demands from all sides already makes project management a demanding job, but standing firm in aligning a product with ethical principles will make your role even more critical.

Product Manager

As the product manager, you may have the most hands-on …..

Today’s Challenges—Case Studies

Ethical Algorithms

Algorithms have the potential to amplify the impact of any action a million times over as they’re implemented. Even in the infancy of their capability, there are many examples of how a lack of ethical safeguards for algorithms affects us.

Hiring Algorithms at Amazon

Projects related to human resources are almost always high risk because they determine access to employment, and impacts can often be a clear threat to the livelihoods of people. However, with online applications and tools like LinkedIn, finding the right candidate while ensuring equal opportunity presents new challenges. In 2016-2017, Amazon attempted to address both of these problems with an internal hiring algorithm which would decide which resumes made it to the next stage of hiring. It wasn’t long before bias against women in technical roles became obvious. Due to existing bias in the historical data, the algorithm had taught itself to identify and penalize resumes from women.

While Amazon may have scrapped this algorithm, most HR representatives anticipate the regular use of AI tools in their hiring processes in the future.
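One simple check a team might run before trusting a screening model is to compare how often each group advances. The sketch below is illustrative only: the data is made up, the function names are hypothetical, and it is not a description of how Amazon built or audited its tool. The 0.8 cutoff is the "four-fifths" rule of thumb from US employment guidelines, used here as an assumed threshold rather than a legal test.

```python
from collections import Counter


def selection_rates(decisions):
    """decisions: iterable of (group, advanced) pairs; returns rate per group."""
    totals, advanced = Counter(), Counter()
    for group, was_advanced in decisions:
        totals[group] += 1
        if was_advanced:
            advanced[group] += 1
    return {group: advanced[group] / totals[group] for group in totals}


def adverse_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Made-up screening outcomes, purely illustrative.
decisions = (
    [("men", True)] * 30 + [("men", False)] * 70
    + [("women", True)] * 12 + [("women", False)] * 88
)
rates = selection_rates(decisions)
ratio = adverse_impact_ratio(rates)
print(rates)
print(f"adverse impact ratio: {ratio:.2f}")
if ratio < 0.8:
    print("Below the four-fifths threshold; review the model before use.")
```

A low ratio does not prove discrimination on its own, but it is a cheap signal that the historical data or the model deserves a closer look.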

Facial Recognition Struggles to Detect Darker Skin Tones

Facial recognition has gained widespread use not only related to accessing phones and accounts, but as a critical tool for law enforcement surveillance, airport passenger screening, and employment and housing decisions.

However, in 2018, research revealed that these algorithms performed notably worse on darker-skinned women, with error rates up to 34 percentage points higher than for lighter-skinned men.
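Gaps like this only become visible when accuracy is reported per group instead of as one overall number. Here is a minimal sketch of that kind of breakdown. The evaluation data, group labels, and function name are all hypothetical and do not reproduce the 2018 research.

```python
from collections import defaultdict


def error_rate_by_group(samples):
    """samples: iterable of (group, true_label, predicted_label) tuples."""
    counts = defaultdict(lambda: [0, 0])  # group -> [errors, total]
    for group, truth, predicted in samples:
        counts[group][0] += int(truth != predicted)
        counts[group][1] += 1
    return {group: errors / total for group, (errors, total) in counts.items()}


# Made-up evaluation results, purely illustrative.
samples = (
    [("lighter-skinned men", "match", "match")] * 99
    + [("lighter-skinned men", "match", "no match")] * 1
    + [("darker-skinned women", "match", "match")] * 70
    + [("darker-skinned women", "match", "no match")] * 30
)
rates = error_rate_by_group(samples)
for group, rate in rates.items():
    print(f"{group}: {rate:.1%} error rate")

# Report the gap in percentage points, not as a relative percentage.
gap = max(rates.values()) - min(rates.values())
print(f"gap: {gap * 100:.1f} percentage points")
```

In this toy data the overall error rate looks modest, which is exactly why a single aggregate figure can hide a large disparity between groups.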

Data Privacy

Period Tracking Apps

Since the federal abortion protection established by Roe v. Wade was overturned in the US in June 2022, period tracking apps have come into focus as a potential means for prosecution under any future outright abortion bans. While most apps in general have terms that allow them to share personal data with third parties, many period tracking apps have murky language around whether data could be shared with authorities for arrest warrants.

While you might need a legal expert to interpret the terms and conditions of all the applications you use on a daily basis, understanding the trade-offs and risks you expose yourself to will only become more important.

Uber Tracked Politicians and Celebrities with “God-Mode”

In 2014, reports claimed that Uber’s “god-mode” gave all employees access to complete user data, which was often used to track celebrities, politicians, and even personal connections (such as ex-partners). A former employee also filed a suit describing reckless behavior with user data and a “total disregard” for privacy.

In 2016, Uber attempted to cover up a major data breach by paying off hackers and not reporting the incident, despite a pending investigation into company security by the US Federal Trade Commission.

Data Security

From inherent security flaws to hacking vulnerabilities, data security can put more than your login info at risk. Apps and websites of all kinds can pinpoint your location, read your messages, and see who you’ve blocked and how you spend your money.

Whether you’re a social butterfly online, or just participate in minimal digital services (banking, texting, email, etc.), you’re most likely exposed in more ways than you know. The following breaches in consent show just how unprotected you may actually be.

Grindr Data Breaches

The LGBTQ dating app Grindr was fined by the Norwegian Data Protection Authority in 2021 on the basis of illegal sales of personal user data. Unfortunately, this is not the first time data breaches and security have been issues for the platform, which experienced highly publicized security exposures regarding the ability of any user to access the precise location and other personal information of others around the world. There was essentially no protection for user data from outside apps.

Data in these exposures, and in Grindr’s sales, was enough to infer “… things like romantic encounters between specific users based on their device’s proximity to one another, as well as identify clues to people’s identities such as their workplaces and home addresses based on their patterns, habits and routines, people familiar with the data said,” the WSJ reported.

Even in countries where homosexuality is not prosecuted, there are harmful biases which can impact users interested in maintaining privacy. Not to mention the threat of intimate partner violence, and _

Scripps Hospital Hack

In May 2021, the Scripps Hospital System in San Diego, California, USA, was hit with a ransomware attack. The system represents five individual hospitals; data from over 150,000 patients was stolen, and daily operations were halted for weeks while networks were taken offline. This was one of the first data breaches which included sensitive medical data, and it represents many of the challenges the healthcare system faces as many practices begin to digitize their operations.

Impacted patients filed several class action lawsuits claiming the company did not invest in enough protection for patient data. Besides having sensitive medical data stolen, patients lost access to the MyScripps portal, which facilitated prescription renewals and other critical care services.

Current Regulations

Many people are aware of the importance of data ethics—but what does it mean to us, as consumers? What does it mean for companies? What does it mean for government agencies? The answer to these questions depends on where you live. In some countries, there are already laws in place requiring companies to be transparent about how they handle personal information; in others, there aren't any regulations at all.

And then there are the ethical questions we face every day: How much do we share with each other online? Should we be sharing less? And what happens when something goes wrong? These are all pressing issues that require thoughtful consideration and strong leadership from both government and industry leaders so that our future isn't threatened by unethical behavior.

Around the world, governments are trying new methods of regulating data collection, AI, and various uses for data (e.g., targeted marketing).

Here are a few regulations worth knowing and following to understand the current state of data regulation where you live:

Data Protection

GDPR (European Union)

CCPA, CPRA (California)

AI Use

EU AI Act

NY AI regulation

Platform Regulation

Digital Services Act (DSA)

Many of these policies are new and ….

Continued Education

Certifications for Professionals

Data Privacy

IAPP Certified Information Privacy Manager (CIPM) certification

Securiti PrivacyOps certification

ISACA Certified Data Privacy Solutions Engineer (CDPSE) certification

IAPP Certified Information Privacy Professional (CIPP) certification

Data Security

CompTIA Security+

Certified Ethical Hacker (CEH)

GIAC Security Essentials Certification (GSEC)

Offensive Security Certified Professional (OSCP)

Accessibility

DataCamp is also developing courses on data ethics, literacy, governance, and more. Keep an eye out for these updates!